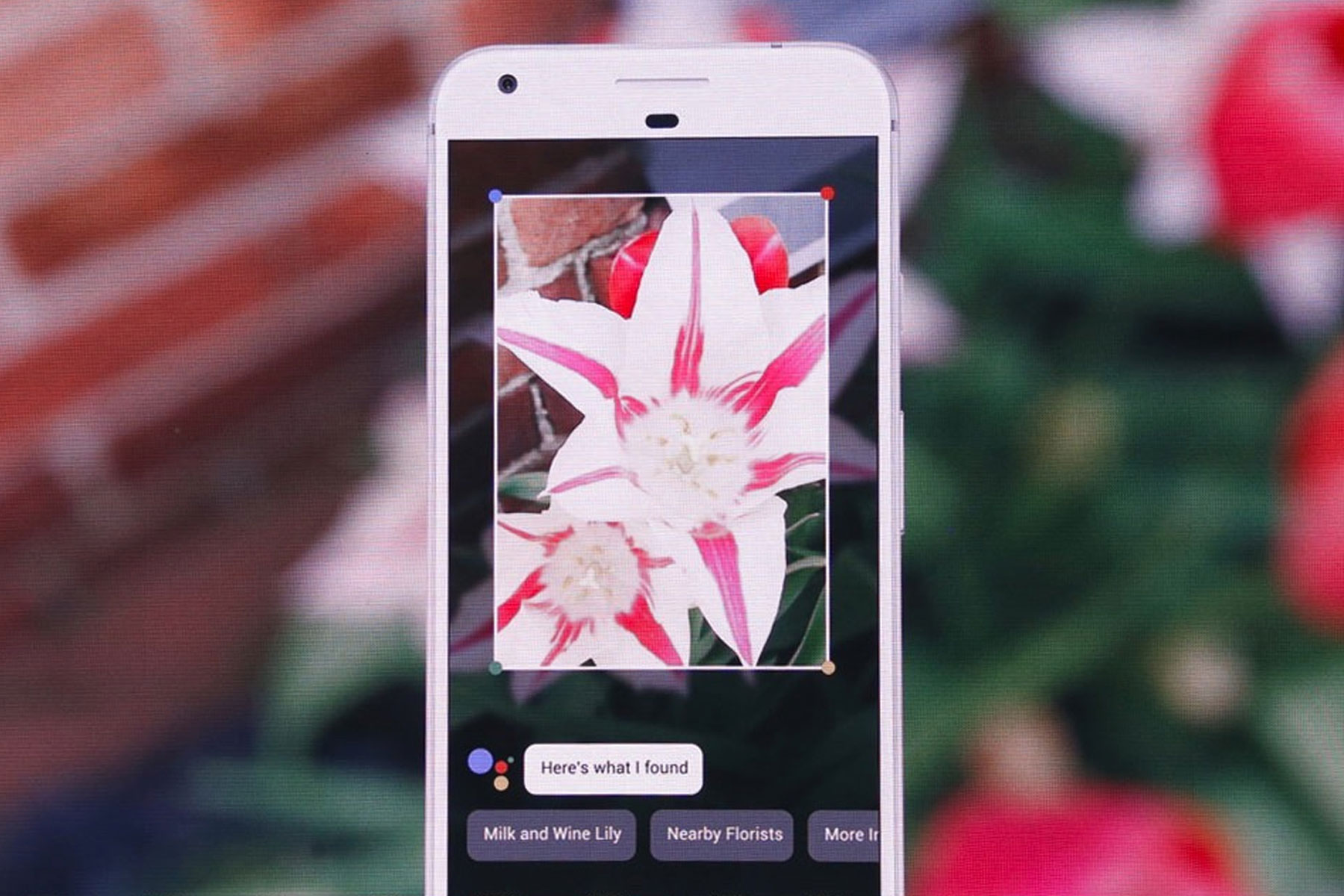

In what could be the ultimate manifestation of data utilisation, Lens will work as a search assistant providing deets about almost anything, anywhere.

The Google I/O 2017 keynote hinted that Google still has lots up its sleeve. Initially rolled out on Pixel 2 and Pixel 2 XL phones, Google Lens is the latest AI-powered feature to cause a significant blip on our radar.

A natural extension of Google Image Search, Google Lens is injected with a healthy dose of AI for object and scene recognition. It could soon be available on every Android phone or tablet (running at least the sixth version, Marshmallow) via Google Assistant and Google Photos, turning your device into a real-time visual recognition tool for objects both natural and man-made.

How it works

With Google Lens, your smartphone camera won’t just see what you see, but will also understand what you see to help you take action. #io17 pic.twitter.com/viOmWFjqk1

— Google (@Google) May 17, 2017

Say you come across a fascinating sculpture in Greece, or an interesting bistro in France. All you have to do is scan the object or landmark to pull up details from Google’s vast information archive, like the history of that sculpture or reviews of the restaurant. You can also do this retroactively with photos.

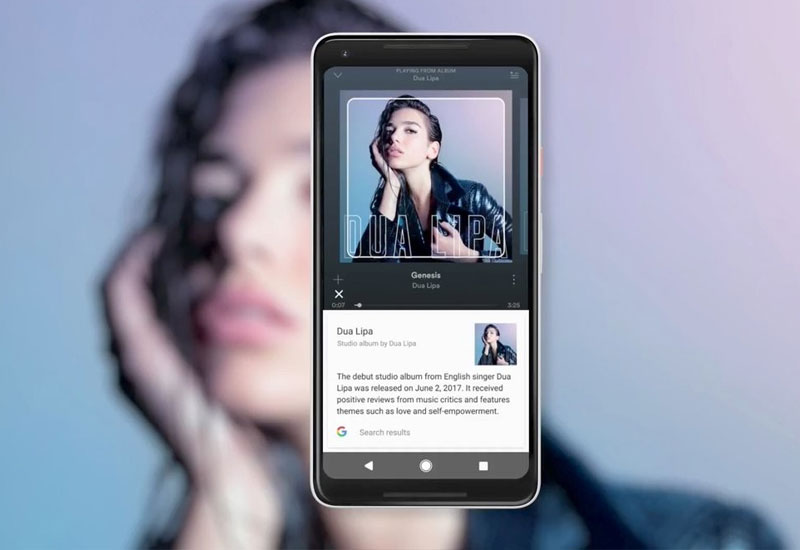

A preview version of Google Lens on the latest Pixels suggests this is only the beginning of greater tech to come. So far, demonstrations have proved its competency in identifying albums, movies, and books from cover art, and e-mail addresses from a flyer ad.

Room for development

In a time when images, still or moving, are the engines of virtual dynamism, visual identification is a tricky tool to master. Beta reviews indicate Google Lens still has a long way to go to reach maximum utility, as existing platforms and apps already have a firmer grasp of this sort of identification. Amazon’s visual search does a pretty decent job where cover art is concerned, while Shazam’s song identification capacity is far beyond Google’s ‘Now Playing’ feature.

Then consider Google Assistant itself – while its artificial intelligence is handy for managing your calendar or accessing a range of dad jokes, it can still be relatively clueless as a digital assistant with access to the world’s largest info tank. However, even the harshest critics agree patience is key because the payoff in this sort of tech lies in long-term implementation and development.

Big data potential

That’s where Google might just have an edge. While Samsung’s Bixby Vision (on Galaxy S8 and Note 8) has already had a go at visual identification, it lacked the data depth and breadth that we have come to know as Google’s trademark. Remember Google Goggles? Well, Lens goes one step further than recognition, understanding and connecting pieces of information in that good ol’ Google brain.

As far as AI goes, that can only mean it will get smarter the more ‘neurons’ are connected. This technology is the bedrock of the latest smart camera, Google Clips, which independently snaps photos based on composition and activity. As more mainstream phone cameras adopt the ARCore augmented reality platform, Google’s visual positioning system will find its way into the standard Lens feature. We hope this means Google Assistant will be able to tell us not just what things are, but where they are.

Google Lens is currently only available via Photos on Pixel and Pixel 2, but Googlers have dropped hints that Lens will find its way to Assistant within a few weeks. A built-in viewfinder in Google Assistant will make real-time visual recognition possible, and it is then only a matter of time before Lens reaches our Android devices.

Images courtesy of Google and AndroidCentral.